Acceptance testing is a critical phase in software development, particularly for projects involving AI code generation. As AI systems take on more complex tasks, verifying that their output meets predefined standards matters more. In these projects, operational performance (OP) acceptance testing helps verify that the generated code is not only functional but also meets quality, security, and maintainability standards. This article explores best practices for conducting OP acceptance testing in AI code generation projects.

Understanding OP Acceptance Testing

Operational Performance (OP) acceptance testing evaluates how well the AI-generated code performs in real-world scenarios. It ensures that the code is not only syntactically correct but also aligns with performance expectations and business requirements. OP testing typically includes evaluating the code’s efficiency, scalability, maintainability, and compliance with industry standards.

Key Best Practices

1. Define Clear Acceptance Criteria

Before initiating OP acceptance testing, establish clear and comprehensive acceptance criteria. These criteria should outline the expected performance metrics, functional requirements, and quality standards for the generated code. Engage stakeholders, including developers, testers, and end-users, to ensure that the criteria reflect all necessary aspects of the project.

Example Criteria:

Performance: Execution time, memory usage, and responsiveness.

Quality: Code readability, adherence to coding standards, and absence of bugs.

Security: Compliance with security guidelines and protection against vulnerabilities.

Scalability: Ability to handle increasing loads or data volumes.
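
Criteria like these are easier to enforce when they are written down as explicit thresholds. The following is a minimal sketch, assuming four hypothetical metrics (`execution_seconds`, `memory_mb`, `lint_warnings`, `known_vulnerabilities`) and illustrative limits; the real names and values would come from your stakeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceptanceCriteria:
    """Hypothetical thresholds; real limits are agreed with stakeholders."""
    max_execution_seconds: float
    max_memory_mb: float
    max_lint_warnings: int
    max_known_vulnerabilities: int


def evaluate(criteria: AcceptanceCriteria, measured: dict) -> list:
    """Return readable failure messages; an empty list means accepted."""
    checks = [
        ("execution_seconds", criteria.max_execution_seconds),
        ("memory_mb", criteria.max_memory_mb),
        ("lint_warnings", criteria.max_lint_warnings),
        ("known_vulnerabilities", criteria.max_known_vulnerabilities),
    ]
    failures = []
    for name, limit in checks:
        value = measured[name]
        if value > limit:
            failures.append(f"{name}: measured {value}, limit {limit}")
    return failures


# Illustrative numbers only.
criteria = AcceptanceCriteria(2.0, 256.0, 0, 0)
measured = {
    "execution_seconds": 1.4,
    "memory_mb": 300.0,
    "lint_warnings": 0,
    "known_vulnerabilities": 0,
}
failures = evaluate(criteria, measured)
print(failures)  # ['memory_mb: measured 300.0, limit 256.0']
```

Keeping the thresholds in one object means the same definition can be shared by testers and by any automated checks.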

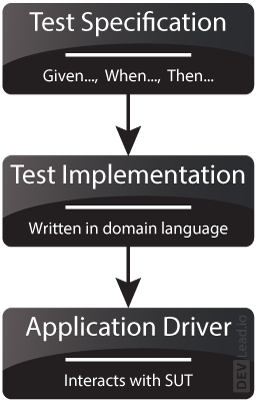

2. Develop a Robust Testing Framework

A robust testing framework is essential for systematic OP acceptance testing. This framework should include various testing methodologies and tools to cover different aspects of the generated code. Common testing types include:

Unit Testing: Verifies individual components or functions.

Integration Testing: Checks the interactions between integrated components.

System Testing: Evaluates the overall functionality and performance of the system.

Performance Testing: Assesses how the system performs under high loads or stress conditions.

Security Testing: Identifies potential security vulnerabilities.

Use automated testing tools where possible to streamline the process and ensure consistency.
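
As one illustration of the unit-testing layer, here is a small sketch using Python's standard `unittest` module. The `slugify` function is a hypothetical stand-in for a piece of AI-generated code; it is not taken from any particular project.

```python
import unittest


def slugify(title: str) -> str:
    """Hypothetical stand-in for an AI-generated function under test."""
    # Replace every non-alphanumeric character with a space, then join words.
    words = "".join(ch.lower() if ch.isalnum() else " " for ch in title).split()
    return "-".join(words)


class SlugifyAcceptanceTest(unittest.TestCase):
    def test_basic_title(self):
        self.assertEqual(slugify("Hello, World!"), "hello-world")

    def test_collapses_whitespace(self):
        self.assertEqual(slugify("  many   spaces  "), "many-spaces")

    def test_empty_input(self):
        self.assertEqual(slugify(""), "")


def run_suite() -> unittest.TestResult:
    """Load and run the test case, returning the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(SlugifyAcceptanceTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)


result = run_suite()
print("accepted" if result.wasSuccessful() else "rejected")
```

The tests encode the expected behavior independently of how the code was generated, so the same suite can be rerun whenever the code is regenerated.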

3. Incorporate Real-World Scenarios

Testing AI-generated code in real-world scenarios provides valuable insights into its performance and usability. Simulate realistic use cases and data inputs to evaluate how the code performs under typical operating conditions. This approach helps identify potential issues that may not be apparent in controlled test environments.

Example Scenarios:

For a web application, test the code with different browsers, devices, and network conditions.

For a data processing application, use large datasets and various data formats.
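
For the data processing case, scenarios can be listed in a table and run in a loop. The sketch below assumes a hypothetical generated function `load_records` that accepts CSV or JSON text; the scenario names and dataset sizes are illustrative.

```python
import csv
import io
import json


def load_records(payload: str, fmt: str) -> list:
    """Hypothetical generated code: parse records from CSV or JSON text."""
    if fmt == "json":
        return json.loads(payload)
    if fmt == "csv":
        return list(csv.DictReader(io.StringIO(payload)))
    raise ValueError(f"unsupported format: {fmt}")


def make_csv(rows: int) -> str:
    """Build a CSV document with a header and the given number of rows."""
    return "\n".join(["id,name"] + [f"{i},user{i}" for i in range(rows)])


def make_json(rows: int) -> str:
    """Build a JSON array with the given number of records."""
    return json.dumps([{"id": str(i), "name": f"user{i}"} for i in range(rows)])


# Each scenario: (name, payload, format, expected record count).
SCENARIOS = [
    ("small csv", make_csv(3), "csv", 3),
    ("small json", make_json(3), "json", 3),
    ("large csv", make_csv(50_000), "csv", 50_000),
    ("header-only csv", make_csv(0), "csv", 0),
]


def run_scenarios() -> dict:
    """Map each scenario name to True if the record count matched."""
    results = {}
    for name, payload, fmt, expected_count in SCENARIOS:
        records = load_records(payload, fmt)
        results[name] = len(records) == expected_count
    return results


print(run_scenarios())
```

In practice the synthetic payloads would be replaced or supplemented with anonymized samples of real production data.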

4. Conduct Comprehensive Code Reviews

Code reviews are a vital part of ensuring code quality. Engage experienced developers and domain experts to review the AI-generated code for adherence to coding standards, readability, and maintainability. Reviews can help identify potential issues early and provide recommendations for improvements.

Review Focus Areas:

Code Structure: Ensure logical organization and modular design.

Documentation: Verify that the code is well-documented with comments and explanations.

Error Handling: Check for proper error handling and recovery mechanisms.
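
Some of these focus areas can be pre-screened automatically before a human review. The sketch below uses Python's standard `ast` module to flag two things: functions without docstrings and bare `except:` clauses. It is an aid for reviewers, not a replacement for them, and the `SAMPLE` snippet is invented for illustration.

```python
import ast


def review_findings(source: str) -> list:
    """Flag undocumented functions and bare 'except:' clauses in source code."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            if ast.get_docstring(node) is None:
                findings.append(
                    (node.lineno, f"function '{node.name}' has no docstring")
                )
        elif isinstance(node, ast.ExceptHandler) and node.type is None:
            findings.append((node.lineno, "bare 'except:' hides errors"))
    # Sort by line number so the report reads top to bottom.
    return [f"line {line}: {message}" for line, message in sorted(findings)]


# Invented example of generated code with both problems.
SAMPLE = '''
def parse(value):
    try:
        return int(value)
    except:
        return None
'''

for finding in review_findings(SAMPLE):
    print(finding)
```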

5. Monitor and Analyze Performance Metrics

Monitoring performance metrics during acceptance testing is crucial for evaluating the efficiency and effectiveness of the AI-generated code. Collect and analyze data on execution time, memory usage, and response times to ensure the code meets performance expectations.

Performance Metrics to Track:

Execution Time: Time taken for the code to complete a specific task.

Memory Usage: Amount of memory consumed by the code during execution.

Response Time: Time taken to respond to user inputs or requests.

Use performance monitoring tools to automate data collection and analysis.
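
For a quick measurement without external tools, Python's standard library is often enough. The sketch below wraps a call with `time.perf_counter` for execution time and `tracemalloc` for peak memory; `build_squares` is a hypothetical workload standing in for generated code. Note that `tracemalloc` adds overhead, so timings taken this way run slower than in production.

```python
import time
import tracemalloc


def measure(func, *args, **kwargs) -> dict:
    """Run func once and report wall-clock time and peak traced memory."""
    tracemalloc.start()
    start = time.perf_counter()
    result = func(*args, **kwargs)
    elapsed = time.perf_counter() - start
    _, peak_bytes = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return {"result": result, "seconds": elapsed, "peak_kib": peak_bytes / 1024}


def build_squares(n: int) -> list:
    """Hypothetical workload standing in for AI-generated code."""
    return [i * i for i in range(n)]


metrics = measure(build_squares, 100_000)
print(f"{metrics['seconds']:.4f} s, peak {metrics['peak_kib']:.0f} KiB")
```

A single run is noisy; repeating the measurement and comparing against the agreed thresholds gives a more reliable picture.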

6. Implement Continuous Integration and Continuous Deployment (CI/CD)

CI/CD practices enhance the efficiency and effectiveness of OP acceptance testing. Integrate automated testing into the CI/CD pipeline to ensure that code changes are continuously tested and validated. This approach helps identify and address issues early in the development process, reducing the risk of defects in production.

CI/CD Practices:

Automated Testing: Run tests automatically with each code commit or change.

Continuous Integration: Regularly merge code changes into a shared repository and test them.

Continuous Deployment: Automatically deploy and test code in staging or production environments.

7. Engage Stakeholders Throughout the Process

Engaging stakeholders throughout the OP acceptance testing process ensures that the generated code aligns with business requirements and user expectations. Involve end-users, project managers, and other relevant parties in testing activities and gather their feedback to refine the code and address any concerns.

Stakeholder Engagement Strategies:

Regular Updates: Provide stakeholders with regular updates on testing progress and results.

Feedback Sessions: Organize sessions to gather feedback and discuss potential improvements.

User Testing: Involve end-users in testing to ensure the code meets their needs and expectations.

8. Document and Report Test Results

Documenting and reporting test results is essential for tracking the effectiveness of OP acceptance testing and communicating findings to stakeholders. Create detailed reports that include test cases, execution results, identified issues, and recommendations for improvements.

Reporting Elements:

Test Summary: Overview of test activities and results.

Issue Log: Detailed information on identified issues and their severity.

Recommendations: Suggestions for addressing issues and enhancing code quality.
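
These reporting elements map naturally onto a small data structure that can be exported for stakeholders. The sketch below is one possible layout, with hypothetical class names (`Issue`, `AcceptanceReport`) and invented sample data; it serializes the summary and a severity-ordered issue log to JSON.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Issue:
    title: str
    severity: str  # assumed scale: "high", "medium", or "low"
    recommendation: str


@dataclass
class AcceptanceReport:
    total: int
    passed: int
    issues: list = field(default_factory=list)

    def summary(self) -> dict:
        """Test summary: counts and pass rate."""
        rate = round(self.passed / self.total, 3) if self.total else 0.0
        return {
            "total": self.total,
            "passed": self.passed,
            "failed": self.total - self.passed,
            "pass_rate": rate,
        }

    def to_json(self) -> str:
        """Serialize the summary and issue log, most severe issues first."""
        order = {"high": 0, "medium": 1, "low": 2}
        ranked = sorted(self.issues, key=lambda issue: order.get(issue.severity, 3))
        payload = {"summary": self.summary(), "issues": [asdict(i) for i in ranked]}
        return json.dumps(payload, indent=2)


# Invented sample data for illustration.
report = AcceptanceReport(
    total=40,
    passed=38,
    issues=[
        Issue("Slow export", "medium", "Stream rows instead of buffering"),
        Issue("Unsanitized search input", "high", "Use parameterized queries"),
    ],
)
print(report.to_json())
```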

9. Continuously Improve Testing Practices

Continuous improvement is key to maintaining effective OP acceptance testing practices. Regularly review and refine testing processes, methodologies, and tools based on feedback and lessons learned. Stay updated with industry trends and best practices to enhance the quality and efficiency of your testing efforts.

Improvement Strategies:

Post-Test Reviews: Conduct reviews to assess the effectiveness of testing practices.

Training: Provide ongoing training for testing teams to stay updated with new tools and techniques.

Feedback Loops: Implement feedback loops to gather input from stakeholders and testing teams.

Conclusion

OP acceptance testing in AI code generation projects is crucial for ensuring that the generated code meets performance, quality, and security standards. Defining clear acceptance criteria, developing a robust testing framework, incorporating real-world scenarios, conducting comprehensive code reviews, monitoring performance metrics, and engaging stakeholders all make your testing efforts more effective. Implementing CI/CD practices, documenting and reporting test results, and continuously improving testing practices reinforce those gains. Together, these best practices help ensure that AI-generated code is reliable, efficient, and aligned with project goals.