Artificial Intelligence (AI) code generators are changing the software development landscape by automating code generation, reducing development time, and reducing human error. However, ensuring the quality and reliability of code produced by these AI systems presents unique challenges. Continuous testing (CT) becomes crucial in this context, but it also introduces complexities not typically encountered in traditional software testing. This article explores the key challenges of continuous testing for AI code generators and proposes solutions to address them.

Challenges in Continuous Testing for AI Code Generators

Unpredictability and Variability of AI Output

Challenge: AI code generators can produce different outputs for the same input because of their probabilistic nature. This variability makes it difficult to predict and consistently validate the generated code.

Solution: Implement an extensive test suite that covers a wide range of scenarios. Use techniques such as snapshot testing, which compares new outputs against previously validated snapshots, to detect unexpected changes.
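
A minimal sketch of snapshot testing in Python follows. It assumes generated code arrives as a string and that snapshots live in a local directory; the names `normalize` and `check_snapshot` are hypothetical. Normalizing whitespace before comparing keeps purely cosmetic variation from failing the check.

```python
from pathlib import Path


def normalize(code: str) -> str:
    """Strip trailing whitespace and blank lines so cosmetic noise does not fail the test."""
    lines = [line.rstrip() for line in code.strip().splitlines()]
    return "\n".join(line for line in lines if line)


def check_snapshot(name: str, generated: str, snapshot_dir: Path) -> bool:
    """Compare generated code against a stored, previously validated snapshot.

    On the first run the snapshot is recorded and the check passes; afterwards
    any difference remaining after normalization is reported as a mismatch.
    """
    snapshot_dir.mkdir(parents=True, exist_ok=True)
    path = snapshot_dir / f"{name}.snap"
    current = normalize(generated)
    if not path.exists():
        path.write_text(current, encoding="utf-8")
        return True
    return path.read_text(encoding="utf-8") == current
```

The first call records the snapshot; later calls fail only when the normalized output actually changes, at which point a reviewer decides whether the new output should become the accepted snapshot.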

Complexity of Generated Code

Challenge: AI-generated code can be highly complex and can mix various programming paradigms and structures. This complexity makes it difficult to ensure full test coverage and to identify subtle bugs.

Solution: Employ advanced static analysis tools to examine the generated code for potential issues. Combine static analysis with dynamic testing methods to catch a more comprehensive set of errors and edge cases.
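
As an illustration, the sketch below pairs a static pass built on Python's standard `ast` module with a dynamic pass that executes the code against known input/output pairs. The single static rule shown (flagging bare `except:` clauses) stands in for the much larger rule sets of real static analyzers, and `static_check` and `dynamic_check` are hypothetical names. Executing generated code with `exec` is only safe inside an isolated sandbox.

```python
import ast


def static_check(source: str) -> list[str]:
    """Return static findings for the source; an empty list means nothing was flagged."""
    try:
        tree = ast.parse(source)
    except SyntaxError as exc:
        return [f"syntax error on line {exc.lineno}: {exc.msg}"]
    findings = []
    for node in ast.walk(tree):
        # A bare `except:` silently swallows every error, a common smell.
        if isinstance(node, ast.ExceptHandler) and node.type is None:
            findings.append(f"bare except on line {node.lineno}")
    return findings


def dynamic_check(source: str, func_name: str, cases: list[tuple[tuple, object]]) -> list[str]:
    """Execute the code and compare the named function against (args, expected) pairs."""
    namespace: dict = {}
    exec(source, namespace)  # only run untrusted generated code inside a sandbox
    func = namespace[func_name]
    failures = []
    for args, expected in cases:
        try:
            actual = func(*args)
        except Exception as exc:
            failures.append(f"{func_name}{args} raised {exc!r}")
            continue
        if actual != expected:
            failures.append(f"{func_name}{args} returned {actual!r}, expected {expected!r}")
    return failures
```

The two passes catch different things: the static pass flags the bare `except:` without running anything, while only the dynamic pass can confirm that the function actually returns the right values.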

Lack of Human Intuition in Code Evaluation

Challenge: Human developers rely on intuition and experience to identify code smells and potential problems, which AI lacks. This gap can lead the AI to produce code that is technically correct but neither optimal nor maintainable.

Solution: Complement AI-generated code with human code reviews. Incorporate feedback loops in which human developers review and refine the generated code, providing insights that can be used to improve the AI's performance over time.
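
One way to make such a feedback loop concrete is to store every review as a structured record, as in this sketch. The `Review` fields and the JSON Lines file format are assumptions chosen for illustration; the point is that each record keeps the generated code, the human revision, and their diff, which is the kind of signal that can later feed back into model improvement.

```python
import difflib
import json
from dataclasses import asdict, dataclass


@dataclass
class Review:
    prompt: str      # what the generator was asked to do
    generated: str   # code as produced by the AI
    revised: str     # code after human review
    comment: str     # reviewer's explanation

    @property
    def accepted_as_is(self) -> bool:
        return self.generated == self.revised

    def diff(self) -> str:
        """Unified diff from the generated code to the human revision."""
        return "".join(difflib.unified_diff(
            self.generated.splitlines(keepends=True),
            self.revised.splitlines(keepends=True),
            fromfile="generated",
            tofile="revised",
        ))


def append_review(path: str, review: Review) -> None:
    """Append one review as a JSON line, including the derived fields."""
    record = asdict(review) | {"accepted_as_is": review.accepted_as_is, "diff": review.diff()}
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(record) + "\n")
```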

Integration with Existing Development Workflows

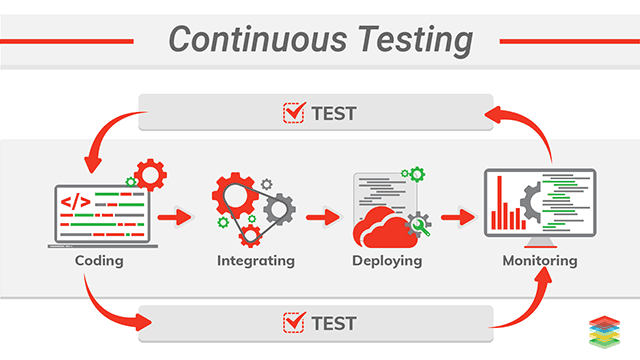

Challenge: Integrating AI code generators into existing continuous integration/continuous deployment (CI/CD) pipelines can be complex. Ensuring seamless integration without disrupting established workflows is essential.

Solution: Develop modular and flexible integration strategies that allow AI code generators to plug into existing CI/CD pipelines. Use containerization and orchestration tools such as Docker and Kubernetes to manage the integration efficiently.

Scalability of Testing Infrastructure

Challenge: Continuous testing of AI-generated code requires significant computational resources, particularly when testing across diverse environments and configurations. Scalability becomes a critical concern.

Solution: Leverage cloud-based testing infrastructure that can scale dynamically with demand. Implement parallel testing to speed up the testing process, ensuring that resources are used efficiently without compromising thoroughness.
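
The sketch below shows parallel test execution on a single machine using Python's `concurrent.futures`. Each snippet runs in its own interpreter via `subprocess`, so a thread pool is sufficient; in a cloud setup the local pool would be replaced by distributed workers. The function names `run_snippet` and `run_in_parallel` are hypothetical.

```python
import subprocess
import sys
from concurrent.futures import ThreadPoolExecutor


def run_snippet(snippet: str, timeout: float = 10.0) -> bool:
    """Run one generated snippet in a fresh interpreter; True if it exits cleanly."""
    try:
        result = subprocess.run(
            [sys.executable, "-c", snippet],
            capture_output=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        # A hung snippet counts as a failure instead of blocking the whole run.
        return False
    return result.returncode == 0


def run_in_parallel(snippets: dict[str, str], workers: int = 4) -> dict[str, bool]:
    """Fan the snippets out across a pool of workers and collect pass/fail by name."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {name: pool.submit(run_snippet, code) for name, code in snippets.items()}
        return {name: fut.result() for name, fut in futures.items()}
```

Isolating each snippet in its own process also keeps one crashing or misbehaving test from corrupting the state of the others.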

Handling Non-Deterministic Behavior

Challenge: AI code generators may exhibit non-deterministic behavior, producing different results on different runs for the same input. This behavior complicates testing and validation.

Solution: Adopt deterministic algorithms where possible and limit the sources of randomness in the code generation process. When non-deterministic behavior is unavoidable, use statistical analysis to understand and account for the variability.
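
When outputs vary from run to run, a single pass or fail says little. A minimal sketch, assuming `generate` is a hypothetical callable that asks the code generator for one output and `passes_tests` is a hypothetical callable that checks it, samples the generator repeatedly and reports the pass rate with a 95% Wilson score interval:

```python
import math
from typing import Callable


def wilson_interval(passes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial pass rate (z = 1.96 gives ~95%)."""
    if trials == 0:
        raise ValueError("need at least one trial")
    p = passes / trials
    denom = 1 + z**2 / trials
    centre = (p + z**2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return max(0.0, centre - half), min(1.0, centre + half)


def estimate_pass_rate(
    generate: Callable[[], str],
    passes_tests: Callable[[str], bool],
    trials: int = 50,
) -> tuple[float, float, float]:
    """Sample the generator repeatedly; return (pass rate, lower bound, upper bound)."""
    passes = sum(passes_tests(generate()) for _ in range(trials))
    low, high = wilson_interval(passes, trials)
    return passes / trials, low, high
```

A release gate might then require the lower bound of the interval, rather than a single lucky run, to exceed an agreed threshold.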

Maintaining Up-to-Date Test Cases

Challenge: As AI code generators evolve, test cases must be continually updated to reflect changes in the generated code's structure and functionality. Keeping test cases relevant and comprehensive is an ongoing challenge.

Solution: Apply automated test case generation tools that can create and update test cases based on the latest version of the AI code generator. Regularly review and prune outdated test cases to maintain a robust and effective test suite.
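
A sketch of automated test case generation, assuming a trusted reference implementation (an oracle) is available for the behavior under test: random inputs are drawn with a fixed seed and their expected results are computed by the reference. `prune_cases` illustrates the pruning step by dropping test groups whose target function no longer exists in the latest generated code. All names here are hypothetical.

```python
import random
from typing import Callable


def generate_cases(
    reference: Callable[[int, int], int], count: int, seed: int = 0
) -> list[tuple[tuple[int, int], int]]:
    """Derive (args, expected) cases from a trusted reference implementation."""
    rng = random.Random(seed)  # fixed seed keeps the generated suite reproducible
    cases = []
    for _ in range(count):
        args = (rng.randint(-1000, 1000), rng.randint(-1000, 1000))
        cases.append((args, reference(*args)))
    return cases


def prune_cases(suite: dict[str, list], current_functions: set[str]) -> dict[str, list]:
    """Drop test groups whose target function no longer exists in the generated code."""
    return {name: cases for name, cases in suite.items() if name in current_functions}
```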

Solutions and Best Practices

Automated Regression Tests

Run regression tests regularly to ensure that new changes or updates to the AI code generator do not introduce new bugs. This practice helps maintain the reliability of the generated code over time.
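
Regression detection can be reduced to comparing per-case results from the previous and current generator versions. In this sketch, the result dictionaries (case name to pass/fail) are assumed to come from whatever test runner the pipeline already uses, and `find_regressions` and `gate` are hypothetical names; a nonzero return from `gate` is meant to fail the CI job.

```python
import sys


def find_regressions(baseline: dict[str, bool], current: dict[str, bool]) -> list[str]:
    """Cases that passed with the previous generator version but fail, or are missing, now."""
    return sorted(
        case
        for case, passed in baseline.items()
        if passed and not current.get(case, False)
    )


def gate(baseline: dict[str, bool], current: dict[str, bool]) -> int:
    """Report regressions on stderr and return a CI-style exit code (0 means pass)."""
    regressions = find_regressions(baseline, current)
    for case in regressions:
        print(f"REGRESSION: {case}", file=sys.stderr)
    return 1 if regressions else 0
```

In a pipeline step this would typically be invoked as `sys.exit(gate(baseline, current))`. Cases that newly pass are not treated as regressions, so improvements never block a release.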

Feedback Loop Systems

Establish feedback loops in which developers can provide input on the quality and efficiency of the generated code. This feedback helps refine and improve the AI models continuously.

Comprehensive Logging and Monitoring

Implement robust logging and monitoring systems to track the performance and behavior of AI-generated code. Logs can provide valuable insights into issues and help diagnose and fix problems more efficiently.
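
A lightweight starting point is a decorator built on Python's standard `logging` module that records the duration and outcome of every call into generated code, as sketched below. The logger name `generated_code` and the decorator name `monitored` are hypothetical; a production setup would configure levels and handlers centrally and ship these records to a monitoring system.

```python
import functools
import logging
import time

logger = logging.getLogger("generated_code")


def monitored(func):
    """Log each call's duration and outcome so failures in generated code are traceable."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            result = func(*args, **kwargs)
        except Exception:
            # logger.exception logs at ERROR level and attaches the traceback.
            logger.exception("%s failed after %.3f s", func.__name__, time.perf_counter() - start)
            raise
        logger.info("%s succeeded in %.3f s", func.__name__, time.perf_counter() - start)
        return result
    return wrapper
```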

Hybrid Testing Methods

Combine various testing methodologies, such as unit testing, integration testing, and system testing, to cover different aspects of the generated code. Hybrid methods ensure a more thorough evaluation and validation process.

Collaborative Development Environments

Foster a collaborative environment in which AI and human developers work together. Tools and platforms that facilitate collaboration can improve the overall quality of both the generated code and the testing processes.

Continuous Learning and Adaptation

Ensure that the AI models used for code generation continuously learn and adapt based on new data and feedback. Continuous improvement helps keep the AI models aligned with the latest coding standards and practices.

Security Testing

Incorporate security testing into the continuous testing framework to identify and mitigate potential vulnerabilities in the generated code. Automated security scanners and penetration testing tools can be used to strengthen security.
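
Dedicated scanners such as Bandit cover Python security checks far more thoroughly, but the core idea can be sketched with the standard `ast` module: walk the syntax tree of the generated code and flag calls that are commonly dangerous. The `RISKY_CALLS` set below is a small illustrative assumption, not a complete policy, and the function names are hypothetical.

```python
import ast

# Illustrative only: a real policy would be much broader.
RISKY_CALLS = {"eval", "exec", "os.system", "pickle.loads"}


def call_name(node: ast.Call) -> str:
    """Render a call target such as `os.system` as a dotted string."""
    parts = []
    target = node.func
    while isinstance(target, ast.Attribute):
        parts.append(target.attr)
        target = target.value
    if isinstance(target, ast.Name):
        parts.append(target.id)
    return ".".join(reversed(parts))


def security_findings(source: str) -> list[str]:
    """Flag risky calls in generated source without executing it."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        name = call_name(node)
        if name in RISKY_CALLS:
            findings.append(f"line {node.lineno}: call to {name}")
        # subprocess.* with shell=True hands the command to a shell (injection risk).
        if name.startswith("subprocess.") and any(
            kw.arg == "shell" and isinstance(kw.value, ast.Constant) and kw.value.value is True
            for kw in node.keywords
        ):
            findings.append(f"line {node.lineno}: {name} with shell=True")
    return findings
```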

Documentation and Knowledge Sharing

Maintain thorough documentation of the testing processes, tools, and techniques used. Knowledge sharing between the development and testing teams can lead to better understanding and more effective testing strategies.

Conclusion

Continuous testing for AI code generators is a multifaceted challenge that requires a mix of traditional and innovative testing approaches. By addressing the inherent unpredictability, complexity, and integration challenges, organizations can ensure the reliability and quality of AI-generated code. Implementing the proposed solutions and best practices will help build a robust continuous testing framework that adapts to the changing landscape of AI-driven software development. As AI code generators become more sophisticated, continuous testing will play a critical role in harnessing their potential while maintaining high standards of code quality and reliability.